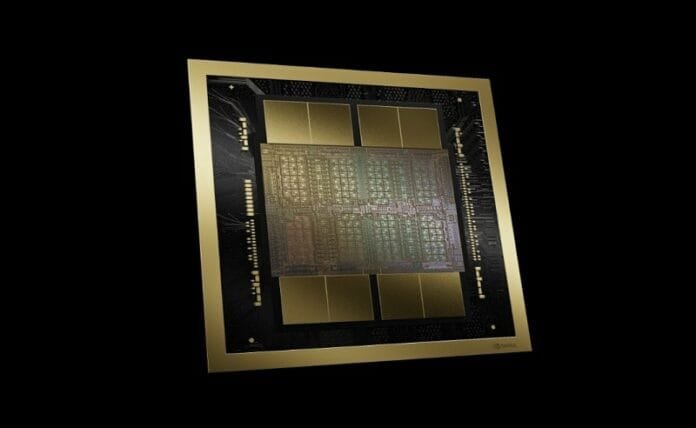

Nvidia has announced the Blackwell B200 GPU, branding it as the "world's most powerful chip" for AI. This next-generation GPU platform is a significant leap in computing technology: the B200 packs 208 billion transistors and is built on a custom TSMC 4NP process.

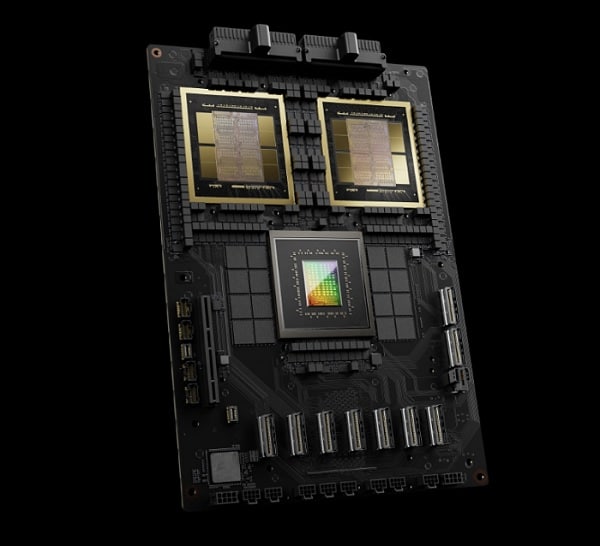

The Blackwell B200 is notable for its chiplet design, which joins two compute dies. Nvidia quotes up to 20 PFLOPS of FP4 (with sparsity) per B200 GPU, which it describes as a fivefold increase in AI inference performance over its predecessor, Hopper. The widely cited figures of 20 PFLOPS in FP8 and FP6 and 40 PFLOPS in FP4 apply to the GB200 Superchip, which pairs two B200 GPUs with a Grace CPU.

The Blackwell platform combines several new technologies aimed at training and running very large AI models and processing huge datasets: a second-generation Transformer Engine, fifth-generation NVLink for high-speed GPU-to-GPU communication, confidential-computing features for secure AI, and a dedicated decompression engine for accelerating data processing.

Nvidia positions its Blackwell GPUs, including the B200, as a substantial step up from previous generations in speed, efficiency, and memory capacity.

Furthermore, these GPUs will be widely available through a global network of partners, including major cloud service providers like AWS, Google Cloud, and Microsoft Azure. They will also be integrated into a variety of servers offered by major manufacturers such as Cisco, Dell, and Lenovo, further broadening their impact on the field of AI and computing at large.

Nvidia’s unveiling of the Blackwell B200 GPU and its associated platform signifies a major advancement in AI technology, promising to empower developers and researchers with the tools to push the boundaries of what’s possible in machine learning, data analysis, and beyond.

Key Features of the Nvidia Blackwell B200 GPU

Here are the key features of the Nvidia Blackwell B200 GPU, distilled into bullet points:

- Chiplet Design: The B200 utilizes a chiplet architecture, featuring two compute dies for enhanced performance and efficiency.

- TSMC 4NP Process: Built on a custom TSMC 4NP process, a 4nm-class node, allowing for high transistor density and energy efficiency.

- 208 Billion Transistors: The GPU boasts 208 billion transistors, offering massive computational power.

- 160 Streaming Multiprocessors (SMs): With 20,480 CUDA cores in total (128 per SM), it provides substantial parallel processing capabilities.

- 192 GB HBM3e Memory: Uses eight 8-high HBM3e stacks (24 GB per stack), giving a large, fast memory pool for big models.

- 8 TB/s Memory Bandwidth: Delivers exceptional data transfer rates, facilitating quick access to large datasets.

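- Up to 1,000W TDP: Nvidia rates the B200 at up to 1,000W in its HGX B200 configuration (700W is the figure for the lower-power B100), reflecting the power needed to sustain its performance.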

- Support for PCIe 6.0: Ensures compatibility with the latest PCIe standard for high-speed data transfer.

- Fifth-Generation NVLink Interconnect: Provides 1.8 TB/s of bidirectional bandwidth per GPU, according to Nvidia, enabling the high-speed GPU-to-GPU communication that large AI models and simulations depend on.
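The memory and core figures in this list are consistent with one another, which a quick calculation makes visible. The following Python sketch is a back-of-the-envelope check, not an Nvidia tool. It assumes 24 Gb (3 GB) DRAM dies in each HBM3e stack, a 1024-bit interface per stack, and an even split of cores across SMs; none of these are stated in the article.

```python
# Back-of-the-envelope check of the B200 spec figures.
# Assumptions (not from the article): 3 GB (24 Gb) DRAM dies per HBM3e layer,
# a 1024-bit interface per stack, and cores split evenly across SMs.

SM_COUNT = 160
TOTAL_CORES = 20_480
HBM_STACKS = 8
DIES_PER_STACK = 8          # "8-high" stacks
GB_PER_DIE = 3              # assumption: 24 Gb dies
TOTAL_BANDWIDTH_TBPS = 8.0  # TB/s across all stacks
BUS_WIDTH_BITS = 1024       # assumption: per-stack interface width


def cores_per_sm(total_cores: int, sm_count: int) -> int:
    """Return the cores in each SM, assuming an even split."""
    if total_cores % sm_count:
        raise ValueError("cores do not divide evenly across SMs")
    return total_cores // sm_count


def hbm_capacity_gb(stacks: int, dies_per_stack: int, gb_per_die: int) -> int:
    """Total HBM capacity in GB: stacks x dies per stack x GB per die."""
    return stacks * dies_per_stack * gb_per_die


def per_stack_bandwidth_tbps(total_tbps: float, stacks: int) -> float:
    """Bandwidth each stack must supply to reach the stated total."""
    return total_tbps / stacks


def implied_pin_rate_gbps(stack_tbps: float, bus_width_bits: int) -> float:
    """Per-pin data rate (Gb/s) implied by one stack's bandwidth and bus width."""
    # TB/s -> Gb/s: x1000 (TB to GB), x8 (bytes to bits); decimal units.
    return stack_tbps * 1000 * 8 / bus_width_bits


if __name__ == "__main__":
    print("cores per SM:", cores_per_sm(TOTAL_CORES, SM_COUNT))                   # 128
    print("HBM capacity (GB):",
          hbm_capacity_gb(HBM_STACKS, DIES_PER_STACK, GB_PER_DIE))               # 192
    stack_bw = per_stack_bandwidth_tbps(TOTAL_BANDWIDTH_TBPS, HBM_STACKS)
    print("bandwidth per stack (TB/s):", stack_bw)                                # 1.0
    print("implied pin rate (Gb/s):",
          round(implied_pin_rate_gbps(stack_bw, BUS_WIDTH_BITS), 2))             # 7.81
```

Under these assumptions, 192 GB works out to 24 GB per stack, and 8 TB/s means each stack delivers about 1 TB/s, or roughly 7.8 Gb/s per pin.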

These features position the Blackwell B200 GPU as a pivotal component in Nvidia's next-generation AI and computing platforms, designed to meet the demands of increasingly complex AI models and computational tasks.

Availability

The Nvidia Blackwell B200 GPU and related products are expected to become available later this year through a broad network of partners, giving customers ranging from cloud service providers to enterprises access to these computing resources. Here's a summary of its availability:

- Cloud Service Availability: Leading cloud service providers, including AWS, Google Cloud, Microsoft Azure, and Oracle Cloud Infrastructure, are expected to be among the first to offer Blackwell-powered instances. This broad availability across major cloud platforms means that developers and enterprises worldwide will have easy access to Blackwell GPUs for their AI and computing needs.

- NVIDIA DGX Cloud: The B200 will also be available on NVIDIA DGX Cloud, an AI platform co-engineered with leading cloud service providers. This platform gives enterprise developers dedicated access to the infrastructure and software needed to build and deploy advanced generative AI models, facilitating cutting-edge AI research and development.

- Server and Hardware Partners: Major hardware manufacturers such as Cisco, Dell, Hewlett Packard Enterprise, Lenovo, and Supermicro are expected to deliver a wide range of servers based on Blackwell products. Additionally, companies like Aivres, ASRock Rack, ASUS, and others will offer servers incorporating Blackwell GPUs, covering a range of market segments and applications.

- Software Ecosystem Support: The Blackwell GPUs will also be supported by a growing network of software makers, including Ansys, Cadence, and Synopsys. These partnerships are crucial for accelerating software applications in engineering simulation, electrical, mechanical, and manufacturing systems design, leveraging the power of Blackwell-based processors for faster product development and higher efficiency.

The strategic partnerships with cloud providers, hardware manufacturers, and software developers underscore Nvidia’s commitment to making Blackwell-based technology accessible to a wide range of users, from cloud computing to enterprise data centers and beyond.

These collaborations will not only enhance the availability of Blackwell GPUs but also ensure they are integrated into solutions that meet the diverse needs of modern computing, AI research, and development.